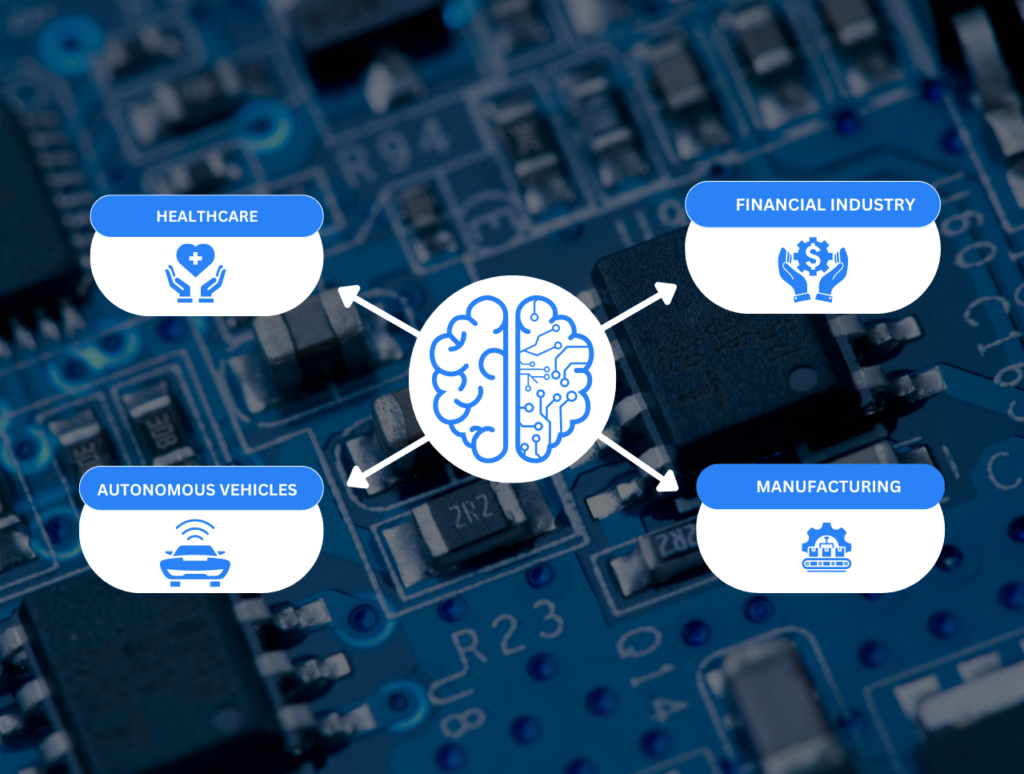

Applications of AI Agents in Various Industries

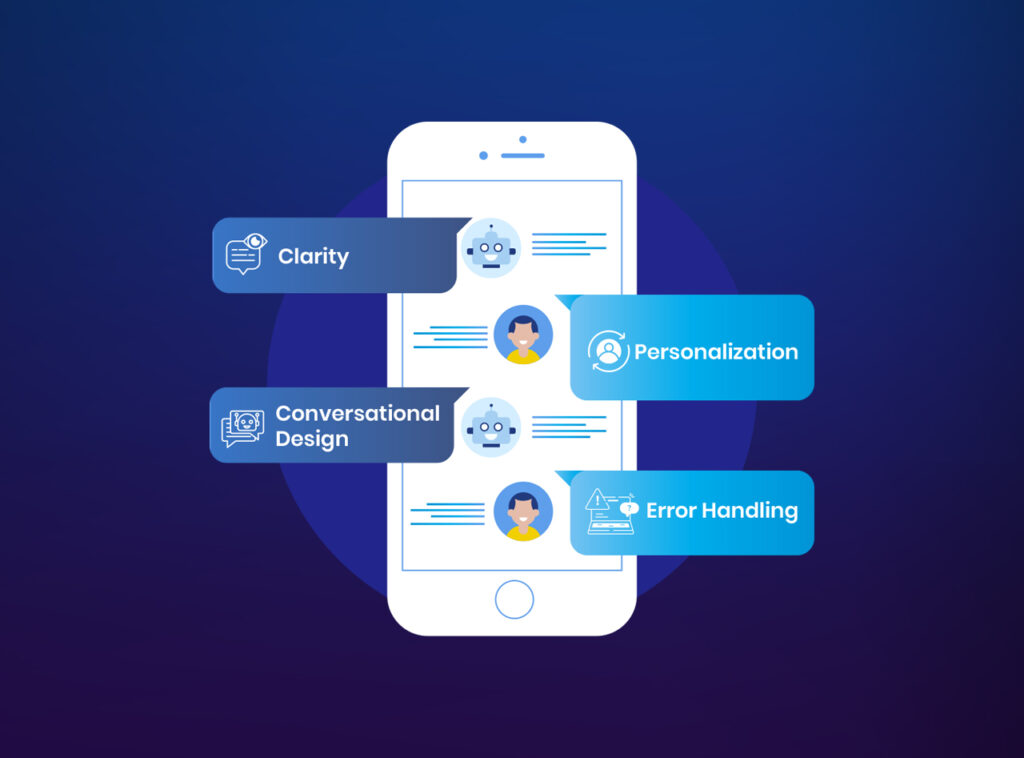

Artificial Intelligence (AI) is transforming industries across the globe, revolutionizing how businesses operate and deliver value to their customers. AI agents, in particular, are at the forefront of this transformation, automating tasks, enhancing decision-making processes, and improving customer experiences. In this blog, we will explore the applications of AI agents in various industries, providing insightful examples that demonstrate their impact and potential.

What Are AI Agents?

AI agents are software programs or systems designed to perform specific tasks by simulating human intelligence. They can perceive their environment, process information, and take actions to achieve predefined goals. These agents utilize machine learning algorithms, natural language processing, and other AI technologies to analyze data, make decisions, and interact with users or other systems. The versatility of AI agents allows them to be applied in various fields, from customer service chatbots to complex predictive analytics systems.

Healthcare

Personalized Treatment Plans

AI agents in healthcare can analyze vast amounts of patient data to create personalized treatment plans. By considering genetic information, lifestyle choices, and previous medical history, AI can recommend treatments tailored to individual patients, improving outcomes and reducing adverse effects.

Example: Tata Memorial Centre in Mumbai uses IBM Watson for Oncology to assist oncologists in diagnosing and creating treatment plans for cancer patients. This AI system analyzes medical literature and patient data to provide evidence-based treatment recommendations.

Predictive Analytics for Disease Prevention

AI agents can predict disease outbreaks and identify patients at risk of developing chronic conditions by analyzing data from wearable devices, electronic health records, and other sources. This proactive approach enables early intervention and preventive care.

Example: Predible Health, a Bengaluru-based startup, uses AI to provide predictive analytics for disease prevention, focusing on early detection of conditions like liver and lung diseases.

Finance

Fraud Detection

AI agents in the finance industry are highly effective at detecting fraudulent activities. By analyzing transaction patterns and identifying anomalies, AI can flag suspicious activities in real time, protecting both customers and financial institutions.

Example: HDFC Bank uses AI-powered systems to detect fraudulent transactions. Its AI system analyzes millions of transactions per day, identifying potentially fraudulent activities with high accuracy.

Algorithmic Trading

AI agents are also transforming trading by executing high-frequency trades based on market data analysis. These algorithms can process information faster than human traders, making split-second decisions that can capitalize on market opportunities.

Example: Zerodha, one of India’s largest stock trading platforms, leverages AI algorithms for better market predictions and trading strategies.

Retail

Personalized Shopping Experiences

AI agents in retail create personalized shopping experiences by analyzing customer data, including browsing history, purchase patterns, and preferences. This allows retailers to recommend products and offer tailored promotions.

Example: Flipkart uses AI to enhance its recommendation engine, suggesting products to customers based on their browsing and purchase history, significantly improving the user experience and driving sales.

Inventory Management

AI agents optimize inventory management by predicting demand and automating replenishment processes. This reduces overstock and stockouts, ensuring that products are available when customers need them.

Example: Reliance Retail uses AI for inventory management, analyzing sales data and predicting trends to ensure that its stores are stocked with the right products at the right time.
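
To make the idea of "predicting demand and automating replenishment" more concrete, here is a minimal Python sketch. It is purely illustrative and is not how any of the companies mentioned here implement their systems: the function names, the 7-day moving average, and the sample numbers are all assumptions, and a production system would likely use a far richer forecasting model.

```python
from statistics import mean


def forecast_daily_demand(daily_sales, window=7):
    """Forecast next-day demand as the mean of the last `window` days.

    A simple moving average stands in for a real demand model (assumption).
    """
    if not daily_sales:
        raise ValueError("daily_sales must not be empty")
    return mean(daily_sales[-window:])


def reorder_quantity(daily_sales, on_hand, lead_time_days, safety_stock, window=7):
    """Return how many units to order so that stock covers the expected
    demand during the supplier lead time plus a safety buffer."""
    expected_demand = forecast_daily_demand(daily_sales, window) * lead_time_days
    reorder_point = expected_demand + safety_stock
    shortfall = reorder_point - on_hand
    # Never order a negative quantity when stock is already sufficient.
    return max(0, round(shortfall))


# Hypothetical sales figures for one product over the past week.
sales = [20, 22, 19, 25, 24, 21, 23]
print(reorder_quantity(sales, on_hand=60, lead_time_days=3, safety_stock=10))  # prints 16
```

With an average of 22 units sold per day and a 3-day lead time, the sketch expects 66 units of demand, adds 10 units of safety stock, and orders the 16 units missing from the 60 on hand. When stock already exceeds the reorder point, it orders nothing.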

Manufacturing

Predictive Maintenance

In manufacturing, AI agents can predict equipment failures before they occur by analyzing data from sensors and other monitoring devices. This predictive maintenance approach reduces downtime and maintenance costs while improving operational efficiency.

Example: Tata Steel uses AI to predict when industrial machinery will need maintenance, helping to prevent costly breakdowns and extend the lifespan of equipment.

Quality Control

AI agents enhance quality control processes by identifying defects in products during the manufacturing process. By analyzing images and other data, AI can detect flaws that human inspectors might miss.

Example: Mahindra & Mahindra employs AI for quality control in its automotive manufacturing plants, using computer vision to inspect components and ensure they meet quality standards.

Education

Personalized Learning

AI agents in education provide personalized learning experiences by adapting content to the needs and progress of individual students. This ensures that learners receive the support they need to succeed.

Example: BYJU’S, a leading Indian edtech company, uses AI to personalize lessons for users, adjusting the difficulty based on their performance and providing targeted practice to improve learning outcomes.

Administrative Automation

AI agents streamline administrative tasks such as grading, scheduling, and student enrollment, freeing up educators to focus on teaching and mentoring.

Example: Amity University uses an AI chatbot to assist with administrative tasks, such as answering student queries and helping with enrollment processes, improving student engagement and satisfaction.

Transportation

Autonomous Vehicles

AI agents are driving the development of autonomous vehicles, which have the potential to revolutionize transportation by reducing accidents, improving traffic flow, and enhancing mobility for those unable to drive.

Example: Tata Elxsi is working on AI-driven autonomous vehicle technology, leveraging AI to enable semi-autonomous driving and improve safety on Indian roads.

Fleet Management

AI agents optimize fleet management by analyzing data on vehicle performance, fuel consumption, and route efficiency. This helps companies reduce costs, improve delivery times, and enhance overall efficiency.

Example: Rivigo, a logistics company in India, uses AI to optimize its delivery routes, reducing fuel consumption and improving delivery efficiency through its innovative logistics solutions.

AI agents are transforming industries by automating tasks, enhancing decision-making, and improving customer experiences. From healthcare and finance to retail and manufacturing, the applications of AI are vast and varied. As technology continues to advance, the potential for AI agents to drive innovation and efficiency in even more sectors will only grow.

For companies looking to stay competitive in an increasingly digital world, embracing AI agents is not just an option but a necessity. The examples provided in this blog demonstrate just a fraction of what is possible, and the future promises even more exciting developments.

If you are interested in leveraging AI agents to transform your business, contact us at Nuclay Solutions to learn how we can help you stay ahead of the curve.

Ready to transform your business with AI?